Chapter 2: Causal Estimation Techniques

2.1 Instrumental

Variables (IV)

Google Colab

2.1.1

Introduction to Instrumental Variables

Instrumental Variables (IV) stand as a pivotal econometric tool aimed at estimating causal relationships in scenarios where conducting controlled experiments is either not feasible or ethical considerations preclude their use. This comprehensive approach not only introduces the concept of IV but also elaborates on its purpose, delineates the sources of endogeneity that IV methods are designed to address, and elucidates how IV methods furnish solutions to these intricate problems.

Instrumental Variables (IV) are employed in statistical analyses to deduce causal relationships under circumstances where controlled experiments are untenable. The quintessential goal behind the utilization of IV methods is to confront and resolve endogeneity issues within econometric models, thereby facilitating a more precise and accurate inference of causality. The challenge of endogeneity emerges from various quarters, each complicating the accurate estimation of causal effects. Primarily, these sources include:

- Omitted Variable Bias: This occurs when a model does not include one or more relevant variables, leading to biased and inconsistent estimates.

- Measurement Error: Errors in measuring explanatory variables can introduce biases and inconsistencies in the estimations, distorting the true relationship between variables.

- Simultaneity: This arises when the causality between variables is bidirectional, complicating the determination of the direction of the causal relationship.

Instrumental Variables methods leverage an external source of variation that influences the endogenous explanatory variable yet remains uncorrelated with the error term within the model. By capitalizing on this external variation, IV methods adeptly navigate through the aforementioned issues of endogeneity, offering a more dependable estimation of causal effects. A notable example illustrating the application of IV is in estimating the impact of education on earnings. The selection of an instrument, such as proximity to colleges, introduces a variation in educational attainment that is exogenous to an individual’s potential earnings, thus permitting an unbiased estimation of the causal effect of education on earnings.

2.1.2 Comparing

Instrumental Variables to Randomized Controlled Trials

Randomized Controlled Trials (RCTs) are deemed the gold standard for causal inference due to their method of randomly assigning treatments to subjects, allowing for the direct observation of causal effects. This random assignment ensures that both the treatment and control groups are statistically equivalent across all characteristics, both observable and unobservable, thus providing clear and unbiased estimates of causal effects.

However, there are scenarios where RCTs are not feasible due to practical limitations, ethical concerns, or the inherent nature of the treatment variable. In such cases, Instrumental Variables (IVs) offer an alternative method for causal inference. IVs are employed when conducting controlled experiments is impractical or unethical. They rely on natural or quasi-experiments and require strong assumptions regarding the instrument’s relevance to the endogenous explanatory variable and its exogeneity to the error term.

The primary distinctions between IVs and RCTs lie in the approach to controlling for confounders. While RCTs achieve this through randomization, IV methods exploit external instruments that mimic random assignment, albeit without direct control by the researcher. This makes IVs particularly useful in situations where:

- Conducting RCTs is impractical, unethical, or excessively costly.

- The effects of variables that cannot be manipulated or randomly assigned are being studied, such as age or geographical location.

- Addressing specific endogeneity issues in observational data that RCTs cannot resolve.

Both RCTs and IVs are instrumental in the causal inference toolbox, each with its unique set of strengths and applicable scenarios. The choice between using an IV approach over RCTs hinges on the research context, the feasibility of experiments, and the nature of the variables involved.

2.1.3 The IV

Estimation Idea

The Instrumental Variables (IV) approach serves as a pivotal solution to the endogeneity problem within econometric analysis. Endogeneity often complicates causal inference, rendering conventional estimation techniques ineffective. IV estimation intervenes by utilizing an instrument—a variable that is correlated with the endogenous explanatory variables yet uncorrelated with the error term in the regression model. This unique characteristic of the instrument allows for the isolation and measurement of the causal impact of the explanatory variable on the outcome.

Conceptual Framework: At its core, IV estimation facilitates causal inference through the exploitation of variation in the explanatory variable that is directly associated with the instrument but remains independent of the confounding factors encapsulated in the error term. This methodological framework ensures that the estimated effects are devoid of bias arising from omitted variables or measurement errors, common sources of endogeneity.

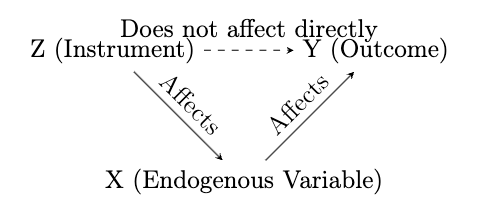

Graphical Illustration: Consider a simplified representation where:

-

denotes the instrument whose primary role is to affect the endogenous variable (

) without directly influencing the outcome variable (

).

-

represents the endogenous explanatory variable that is presumed to causally impact

, the outcome of interest.

-

The causal pathway from

to

is mediated entirely through

, underscoring the instrument’s indirect influence on the outcome.

The accompanying diagram visualizes these relationships,

highlighting the instrument’s (Z) effect on the endogenous variable

(X) and, subsequently, on the outcome variable (Y), while

emphasizing the absence of a direct link from

This section underscores the theoretical underpinnings and practical implications of IV estimation, illustrating its utility in empirical research where experimental designs are infeasible. Through this method, researchers are equipped to forge a path towards robust causal inference, navigating the challenges posed by endogenous relationships within their analytical frameworks.

2.1.4 Key

Assumptions and Conditions for Valid Instrumental Variables

Instrumental Variables (IV) estimation stands as a cornerstone econometric method for addressing endogeneity, enabling researchers to uncover causal relationships where direct experimentation is impractical. The efficacy of this approach, however, hinges on the satisfaction of several critical assumptions. These assumptions ensure that the instruments employed can legitimately serve as proxies for the endogenous explanatory variables, thereby providing unbiased and consistent estimators of causal effects.

First Assumption: Relevance The relevance condition necessitates a strong correlation between the instrument and the endogenous explanatory variable. This relationship is crucial as it underpins the instrument’s ability to meaningfully influence the endogenous variable, thereby offering a pathway to identify the causal effect of interest. Mathematically, this assumption is expressed as:

where the covariance between the instrument and the endogenous variable must be non-zero. This statistical relationship validates the instrument’s capacity to induce variations in the endogenous explanatory variable that are essential for IV estimation.

Second Assumption: Exogeneity The assumption of exogeneity asserts that the selected instrument must be uncorrelated with the error term in the regression equation. This condition is vital to ensure that the instrument does not capture any of the omitted variable biases that might otherwise contaminate the estimations. The mathematical representation of this assumption is:

where

Overidentification and Multiple Instruments In scenarios where researchers deploy multiple instruments, each instrument must independently satisfy both the relevance and exogeneity conditions. Additionally, the instruments should not be perfectly correlated with each other, ensuring that each offers distinct information about the endogenous variable. The Sargan-Hansen test provides a mechanism to test for overidentification, verifying that the instruments as a collective are valid and do not overfit the model.

Note: Adherence to these key assumptions is imperative for the integrity of IV estimation. Violations may lead to biased and inconsistent results, emphasizing the necessity for meticulous instrument selection and rigorous validation processes. The careful application of these principles ensures that IV methods yield reliable insights into causal relationships, thereby enhancing the robustness of econometric analysis.

2.1.5

Identification with Instrumental Variables

Identification plays a pivotal role in the utilization of Instrumental Variables (IV) within econometric analysis. Specifically, identification refers to the capacity to accurately estimate the causal impact of an independent variable on a dependent variable by harnessing the exogenous variation induced by the IV. This concept is foundational in ensuring that the causal inferences drawn from IV estimations are valid and reliable.

Conditions for Robust Identification: Achieving proper identification with IVs necessitates adherence to several critical conditions, each designed to validate the instrument’s effectiveness in isolating the true causal relationship:

- Instrument Exogeneity: The cornerstone of IV identification is the requirement that the instrument must not share any correlation with the error term in the regression model. This ensures that the instrument’s influence on the dependent variable is channeled exclusively through its correlation with the endogenous independent variable, thereby eliminating concerns of omitted variable bias influencing the estimates.

- Instrument Relevance: Moreover, for an IV to be considered valid, it must exhibit a strong correlation with the endogenous independent variable. This condition, known as instrument relevance, guarantees that the IV introduces sufficient exogenous variation to effectively identify the causal effect in question.

- Single Instrument Single Endogenous Variable: In scenarios involving a single instrument and a single endogenous variable, the IV methodology primarily identifies the Local Average Treatment Effect (LATE). This specific effect pertains to the subset of the population whose treatment status (i.e., the endogenous variable) is influenced by the instrument.

- Multiple Instruments: The introduction of multiple instruments potentially broadens the scope of identification beyond LATE, facilitating a more comprehensive understanding of the causal effect across different segments of the population. However, this extension is contingent upon the validity of the instruments and adherence to the above conditions.

Importance of Proper Identification: The essence of leveraging IVs in econometric analysis rests on the premise of proper identification. It is this principle that distinguishes genuine causal relationships from mere correlations or associations marred by endogeneity. Ensuring that the conditions for identification are met is not merely a technical exercise but a fundamental prerequisite for the empirical credibility of IV estimation, underscoring the nuanced complexities inherent in causal inference.

2.1.6

Two-Stage Least Squares (2SLS) Methodology

The Two-Stage Least Squares (2SLS) methodology emerges as a cornerstone technique in the realm of econometrics, specifically tailored for instrumental variables estimation to tackle the pervasive issue of endogeneity. This method delineates a systematic approach to estimating the causal impact of an independent variable on a dependent variable by leveraging an instrument that remains uncorrelated with the error term, thereby circumventing the biases associated with endogeneity.

Operational Stages of 2SLS: The implementation of 2SLS is methodically divided into two distinct stages, each serving a unique purpose in the estimation process:

- First Stage: Initially, the focus is on regressing the endogenous independent variable against the instrumental variable(s) alongside any exogenous variables present within the model. This stage is instrumental in deriving the predicted values for the endogenous variable, which are ostensibly purged of the endogeneity bias. The primary objective here is to substitute the original endogenous variable with its predictions based on the instrumental variables, ensuring these estimates are devoid of the original endogeneity concerns.

- Second Stage: Subsequently, the analysis proceeds to regress the dependent variable on the predicted values obtained from the first stage, in addition to incorporating any other exogenous variables. This crucial stage aims to quantify the causal effect of the endogenous variable on the dependent variable, now equipped with a predictor that is cleansed of endogeneity. The essence of this stage lies in its capacity to furnish an estimation of the causal relationship, effectively addressing the initial endogeneity issue.

Significance of 2SLS in Empirical Research: The advent of the 2SLS method marks a significant milestone for empirical investigations, particularly in scenarios where deploying natural experiments or conducting randomized control trials pose substantial challenges. By offering a viable and robust alternative to ordinary least squares (OLS) regression in the face of endogeneity, 2SLS enhances the reliability and validity of causal inferences drawn from econometric analyses.

Example 1: IV in Regression Analysis

This section provides a comprehensive look at employing Instrumental Variables (IV) in regression analysis to assess the causal influence of education on earnings.

Problem Definition:

-

Aim: Accurately estimate the causal effect of education (

) on earnings (

), mitigating endogeneity issues.

Regression Model:

| (1) |

Endogeneity Challenge:

-

could be endogenously correlated with

, potentially due to omitted variables, measurement error, or reverse causality, risking bias in OLS estimates.

Instrumental Variable Strategy:

-

Chosen Instrument: Proximity to the nearest college (Distance to College), presumed to affect

without directly influencing

, except via

.

Two-Stage Least Squares (2SLS) Method:

-

First Stage: Estimate

as a function of

and other exogenous variables:

(2) -

Second Stage: Regress

on the predicted values of

from Equation (2), isolating the causal effect:

(3)

Equations (2) and (3) elaborate on the 2SLS process, elucidating the mechanism to

address the endogeneity of

Conclusion: Implementing the 2SLS technique, with Distance to College as an instrumental variable, enables a more accurate estimation of the causal effect of education on earnings, effectively handling the endogeneity bias and enhancing result interpretability.

Example 2: IV in Regression Analysis

This example illustrates the use of Instrumental Variables (IV) in regression analysis to estimate the causal effect of police presence on crime rates, particularly addressing endogeneity concerns.

Problem Statement:

-

Objective: Estimate the causal impact of police presence (

) on crime rates (

).

-

Regression Model:

(4) -

Concern:

may be endogenously correlated with

, complicating causal interpretation.

Instrumental Variable Solution:

-

Proposed Instrument: Political changes, assumed to influence

police allocation and thereby

, independent of the crime rate.

Two-Stage Least Squares (2SLS) Approach:

-

First Stage: Predict

as a function of the instrumental variable and possibly other exogenous covariates.

(5) -

Second Stage: Use the predicted values of

(

) from the first stage to estimate its effect on

.

(6)

Equations (5) and (6) form the core of the 2SLS methodology, providing a framework to

estimate the causal effect of

Conclusion: The application of the 2SLS method with an appropriate instrumental variable allows for a more accurate and causally interpretable estimate of the impact of police presence on crime rates, highlighting the importance of addressing endogeneity in econometric analysis.

2.1.7 Testing

IV Assumptions, Validity, and Challenges

Instrumental variables (IV) analysis is a critical method in econometrics for addressing endogeneity issues. Testing the assumptions and validity of IVs, along with acknowledging their challenges and limitations, is essential for credible causal inference.

Testing IV Assumptions and Validity. The relevance of IVs is initially assessed through F-statistics, which help to check the strength of the instrument. A low F-statistic suggests that the instrument may be weak, potentially leading to unreliable estimates. For a more direct assessment of instrument strength, specific weak instrument tests are applied to evaluate the significant correlation between the instrument and the endogenous variable.

In addition to relevance, the exogeneity of IVs is crucial. Overidentification tests are utilized when multiple instruments are available, allowing researchers to check if the instruments are uncorrelated with the error term, thus satisfying the exogeneity condition. Moreover, Hansen’s J statistic offers a formal approach to test the overall validity of the instruments by assessing both relevance and exogeneity assumptions together.

Challenges and Limitations of Using IV. Identifying valid instruments that meet both relevance and exogeneity conditions is a practical challenge in applied research. Weak instruments, if not adequately tested, can lead to biased and inconsistent estimates, undermining the reliability of the causal inference. Even with strong instruments, incorrect model specifications or violations of the IV assumptions can result in biased estimates, highlighting the importance of rigorous testing and validation in IV analysis.

This comprehensive approach to testing IV assumptions, along with a critical understanding of the potential challenges and pitfalls, ensures the robustness and credibility of the causal inferences drawn from econometric analyses.

IV Applications in Various Fields

Economic History and Development Economics: The Long-Term Effects of Africa’s Slave Trades, Nathan Nunn, The Quarterly Journal of Economics, 2008

- Objective: Examine the impact of Africa’s slave trades on its current economic underdevelopment.

- Methodology Instrument: Use of shipping records and historical documents to estimate the number of slaves exported from each African country as an instrumental variable.

- Reason: The historical intensity of slave trades is employed to identify the causal effect on present-day economic performance, isolating the impact from other confounding factors.

- Data: Compilation of slave export estimates from various sources, including the Trans-Atlantic Slave Trade Database, and data on slave ethnicities to trace the origins of slaves.

- Results: Finds a robust negative relationship between the number of slaves exported and current economic performance, suggesting a significant adverse effect of the slave trades on economic development.

Labor Economics: Does Compulsory School Attendance Affect Schooling and Earnings?, Joshua D. Angrist and Alan B. Krueger, The Quarterly Journal of Economics, 1991

- Objective: Investigate the effect of compulsory school attendance laws on educational attainment and earnings.

- Methodology & Instrument: Utilization of quarter of birth as an instrumental variable for education, exploiting the variation in educational attainment induced by compulsory schooling laws.

- Reason: Season of birth affects educational attainment due to school entry age policies and compulsory attendance laws, providing a natural experiment for estimating the impact of education on earnings.

- Data: Analysis of U.S. Census data to examine the relationship between education, earnings, and season of birth.

- Results: The study finds that compulsory schooling laws significantly increase educational attainment and earnings, supporting the hypothesis that education has a causal effect on earnings.

Economic History and Development Economics: The Colonial Origins of Comparative Development: An Empirical Investigation, Daron Acemoglu, Simon Johnson, and James A. Robinson, American Economic Review, 2001

- Objective: Examine the effect of colonial-era institutions on modern economic performance, using European settler mortality rates as an instrument for institutional quality.

- Methodology & Instrument: Exploitation of variation in European mortality rates to estimate the impact of institutions on economic performance, with the premise that higher mortality rates led to the establishment of extractive institutions.

- Reason: High mortality rates discouraged European settlement, leading to the creation of extractive institutions in colonies, which have long-lasting effects on economic development.

- Data: Utilization of historical data on settler mortality rates, combined with contemporary economic performance indicators.

- Results: Demonstrates a significant and positive impact of institutions on income per capita, suggesting that better institutional frameworks lead to better economic outcomes.

Public Economics: Medicare Part D: Are Insurers Gaming the Low Income Subsidy Design?, Francesco Decarolis, American Economic Review, 2015

- Objective: Investigate how insurers may manipulate the subsidy design in Medicare Part D, affecting premiums and overall program costs.

- Methodology & Instrument: Analysis of plan-level data from the first five years of the program to identify pricing strategy distortions and employing instrumental variable estimates to assess the impact.

- Reason: The paper explores the strategic behavior of insurers in response to the subsidy design, aiming to uncover its implications on premium growth and program efficiency.

- Data: Utilizes plan-level data from Medicare Part D covering enrollment and prices, focusing on the largest insurers.

- Results: Finds evidence of insurers’ gaming affecting premiums and suggests modifications to the subsidy design could enhance program efficiency without compromising consumer welfare.

Media and Social Outcomes: Media Influences on Social Outcomes: The Impact of MTV’s "16 and Pregnant" on Teen Childbearing, Melissa S. Kearney and Phillip B. Levine, American Economic Review, 2015

- Objective: Evaluate the impact of MTV’s reality show "16 and Pregnant" on teen childbearing rates.

- Methodology & Instrument: Analysis of geographic variation in changes in teen birth rates related to the show’s viewership, employing an instrumental variable strategy with local area MTV ratings data.

- Reason: The reality show, depicting the hardships of teenage pregnancy, provides a natural experiment to assess media’s influence on teen behavior and decision-making regarding pregnancy.

- Data: Utilizes Nielsen ratings, Google Trends, and Twitter data to gauge the show’s viewership and its correlation with interest in contraceptive use and abortion.

- Results: The study suggests a significant reduction in teen birth rates associated with the show, indicating the potential of media to affect social outcomes by influencing public attitudes and behaviors.

2.1.8 Local

Average Treatment Effect (LATE)

The concept of the Local Average Treatment Effect (LATE) is pivotal in the context of instrumental variables (IV) analysis, particularly when addressing the issue of endogeneity in treatment assignment. LATE defines the average effect of a treatment on a specific subgroup of the population, known as compliers. These are individuals whose treatment status is directly influenced by the presence of an instrument. This selective approach enables the estimation of causal effects by focusing on the variation in treatment induced by the instrument, offering a nuanced understanding of treatment efficacy within a targeted group.

LATE is the causal effect of interest in situations where the treatment assignment is not entirely random but is instead influenced by an external instrument. This framework allows for the isolation and estimation of the treatment’s effect on compliers—those who receive the treatment due to the instrument’s influence. Such a measure is crucial in IV analysis, as it accounts for the heterogeneity in treatment response and the complexities of non-random assignment.

LATE versus ATE. The distinction between LATE and the Average Treatment Effect (ATE) is fundamental in econometric analysis. While LATE concentrates on the effect of treatment on compliers, offering insights into the impact of the treatment within a specific, instrument-influenced subgroup, ATE aims to quantify the average effect of treatment across the entire population, assuming random treatment assignment. The relevance of LATE over ATE in certain contexts arises from its ability to provide a more precise estimate of treatment effects when there is non-random assignment and endogeneity. ATE, although widely applicable, might not accurately reflect the causal relationship in scenarios where the treatment assignment is endogenously determined or correlated with potential outcomes.

Relevance in IV Analysis. The utility of LATE in IV analysis is especially pronounced. By leveraging the exogenous variation introduced by the instrument, LATE facilitates the identification of a causal effect that is more pertinent for policy analysis and decision-making. This is particularly true in cases where treatment is not randomly assigned, making LATE an indispensable tool in the econometrician’s toolkit for understanding and estimating causal relationships in the presence of complex assignment mechanisms.

This exploration into LATE underscores its significance in econometric research, highlighting the nuanced distinctions between LATE and ATE and the particular relevance of LATE in IV analysis for addressing endogeneity and non-random treatment assignment.

LATE Applications in Various Fields

Public Economics: Does Competition among Public Schools Benefit Students and Taxpayers?, Caroline M. Hoxby, American Economic Review, 2000

- Objective: Examine the impact of competition among public schools, generated through Tiebout choice, on school productivity and private schooling decisions.

- Methodology & Instrument: Utilizes natural geographic boundaries as instruments to assess the effect of school competition within metropolitan areas.

- Reason: Tiebout choice theory posits that the ability of families to choose among school districts leads to competition, potentially enhancing school efficiency.

- Data: Empirical analysis based on metropolitan area school performance data, considering factors like student achievement and schooling costs.

- Results: Finds that areas with more extensive Tiebout choice exhibit higher public school productivity and lower rates of private schooling, indicating beneficial effects of competition.

Macroeconomics and Climate Change: Temperature Shocks and Economic Growth: Evidence from the Last Half Century, Melissa Dell, Benjamin F. Jones, and Benjamin A. Olken, American Economic Journal: Macroeconomics, 2012

- Objective: Investigate the impact of temperature fluctuations on economic growth over the past half-century.

- Methodology & Instrument: Utilizes historical temperature and precipitation data across countries, analyzing their effects on economic performance through year-to-year fluctuations.

- Reason: To contribute to debates on climate’s role in economic development and the potential impacts of future warming.

- Data: Country and year-specific temperature and precipitation data from 1950 to 2003, combined with aggregate output data.

- Results: Finds that higher temperatures significantly reduce economic growth in poor countries without affecting rich countries, indicating substantial negative impacts of warming on less developed nations.

2.1.9 Advanced

IV Methods

Instrumental Variables (IV) methods are fundamental in econometrics for addressing endogeneity and establishing causal relationships. Beyond basic applications, advanced IV techniques have been developed to tackle more intricate data structures and econometric models, enhancing the robustness and applicability of causal inference.

Complex IV Approaches. The evolution of IV methodologies has led to the development of sophisticated techniques tailored for specialized econometric challenges:

- Panel Data: Advanced IV methods for panel data incorporate the longitudinal dimension of datasets, leveraging within-individual variations over time to control for unobserved heterogeneity. This approach is pivotal for studies where individual-specific, time-invariant characteristics might bias the estimated effects.

- Dynamic Models: In models characterized by dependencies between current decisions and past outcomes, dynamic IV techniques are employed to address the endogeneity arising from feedback loops. These methods utilize instruments to isolate exogenous variations, ensuring the identification of causal effects.

- Nonlinear Relationships: The extension of IV estimation to settings with nonlinear dependencies between variables necessitates the use of specialized instruments and estimation strategies. This adaptation allows for the accurate modeling of complex relationships beyond linear frameworks.

Generalized Method of Moments (GMM). A significant extension of the IV concept is embodied in the Generalized Method of Moments (GMM), a versatile tool that accommodates a wide range of econometric models:

- Overview: GMM extends the IV methodology to a broader setting, where the number of moment conditions exceeds the parameters to be estimated. This framework is particularly adept at utilizing multiple instruments to provide more efficient and reliable estimates.

- Application: GMM finds its strength in dynamic panel data models and situations with complex endogenous relationships. It capitalizes on the additional moment conditions to refine estimates and enhance the credibility of causal inferences.

- Advantages: Beyond its flexibility in model specification, GMM offers rigorous mechanisms for testing the validity of instruments through overidentification tests and provides robustness checks. This makes it an invaluable approach for empirical research facing multifaceted econometric challenges.

The advancements in IV methods, including the utilization of panel data techniques, dynamic model analysis, and the incorporation of nonlinear relationships, together with the comprehensive framework provided by GMM, represent crucial milestones in the field of econometrics. These developments enable researchers to navigate complex data structures and econometric models, paving the way for more nuanced and credible causal analyses.

2.1.10

Conclusion and Best Practices

Throughout this exploration of Instrumental Variables (IV) in econometric analysis, we have covered a broad spectrum of topics crucial for understanding and applying IV methods effectively. The rationale behind the use of IV to tackle endogeneity issues and facilitate causal inference has been a foundational theme. We delved into the selection of appropriate instruments, emphasizing the importance of their validity for the reliability of IV estimates. Moreover, the discussion extended to the application of IV methods across various econometric models, acknowledging the challenges and limitations that researchers may encounter. Advanced IV methods, including those applicable to panel data and the Generalized Method of Moments (GMM), were also highlighted, showcasing the evolution of IV techniques to address more complex data structures and econometric models.

To ensure the effectiveness and reliability of IV estimates in empirical research, several best practices have been identified. Careful instrument selection is paramount; instruments must be strongly correlated with the endogenous regressors but not with the error term to avoid biases in the estimates. Performing robustness checks, including overidentification tests and weak instrument tests, is crucial for assessing the validity and strength of the instruments. Transparent reporting is another cornerstone of credible IV analysis; researchers are encouraged to document the rationale for instrument selection, the tests conducted, and any limitations or potential biases in the analysis thoroughly. Finally, considering alternative methods for causal inference, such as difference-in-differences or regression discontinuity designs, is advisable when suitable instruments are hard to find, ensuring the robustness of the empirical findings.

This comprehensive overview and the outlined best practices serve as a guide for researchers and practitioners in the field of econometrics. By adhering to these principles, the econometric community can continue to advance the application of IV methods, enhancing the credibility and impact of empirical research in the social sciences.

2.1.11

Empirical Exercises:

Exercise 1: The Role of Institutions and Settler Mortality Google Colab

This section examines the groundbreaking work by ?. Their research investigates the profound impact of colonial-era institutions on contemporary economic performance across countries. By employing an innovative instrumental variable approach, the authors link historical settler mortality rates to the development of economic institutions and, subsequently, to present-day levels of economic prosperity.

Key Variables and Data Overview

- Dependent Variable: GDP per capita - a measure of a country’s economic performance.

- Independent Variable: Institution Quality - a proxy for the quality of institutions regarding property rights.

- Instrumental Variable: Settler Mortality - used to address the endogeneity of institutional quality by exploiting historical variations in settler health environments.

Reproduction Tasks

Reproduce Figures 1, 2, and 3, which illustrate the relationships between Settler Mortality, Institution Quality, and GDP per capita.

Estimation Tasks (first column of Table 4)

- OLS Estimation: Estimate the impact of Institution Quality on GDP per capita.

- 2SLS Estimation with IV: Use Settler Mortality as an instrumental variable for Institution Quality.

Empirical Results from the Study

Ordinary Least Squares (OLS) Regression

| (7) |

First-Stage Regression: Predicting Institutional Quality

| (8) |

Second-Stage Regression: Estimating the Impact of Institutions on Economic Performance

| (9) |

Unveiling Stories from the Data

- How does Settler Mortality relate to current GDP per capita across countries, and what might be the underlying mechanisms?

- Explore the potential indirect pathways through which Settler Mortality might affect modern economic outcomes via Institution Quality.

- Discuss how historical experiences, reflected in Settler Mortality rates, have left enduring marks on institutional frameworks.

- Analyze the empirical evidence on the role of Institution Quality in shaping economic destinies. Reflect on the difference between OLS and 2SLS estimates.

Interpreting Regression Results

- Considering the first-stage regression results, what does the coefficient of −0.61 indicate about the relationship between Settler Mortality and Institution Quality?

- How does the second-stage coefficient of 0.94 enhance our understanding of the impact of Institution Quality on GDP per capita?

- Reflect on the OLS results with a coefficient of 0.52. What does this tell us about the direct correlation between Institution Quality and GDP per capita without addressing endogeneity?

Exercise 2: The Role of Slave Trades and Current Economic Performance Google Colab

This exercise investigates the long-term impacts of Africa’s slave trades on current economic performance across nations. Utilizing a comprehensive dataset on historical slave exports, the analysis reveals a significant negative correlation between the number of slaves exported from African countries and their present-day GDP per capita. This underscores the profound and lasting economic consequences of historical slave trades, highlighting the importance of historical events in shaping modern economic landscapes.

Preliminary Analysis: Ordinary Least Squares (OLS) Regression

Before applying the two-stage least squares (2SLS) method, we start with a simple OLS regression to establish a baseline understanding of the relationship between exports per area and economic performance. The OLS equation is given by:

The regression model explores the long-term economic impacts of historical slave trades:

| (10) |

where lnyi denotes the natural log of

real per capita GDP in country i in 2000,

and ln

Tasks for OLS Analysis

- Estimate the OLS regression and interpret the coefficient β.

- Discuss the potential biases in the OLS estimate if the key independent variable is endogenous.

Two-Stage Least Squares (2SLS) Method

This exercise involves the equation:

| (11) |

which we break down into a two-stage least squares (2SLS) estimation process.

First-Stage Regression

Predict the endogenous variable using instrumental variables:

| (12) |

where Zi represents instrumental variables, Xi are control variables, and ui is the error term.

Second-Stage Regression

Substitute the predicted values from the first stage into the original equation:

| (13) |

where νi accounts for the substitution of the predicted values.

Tasks

-

Perform the first-stage regression to predict

ln

.

- Conduct the second-stage regression to estimate the causal impact of exports per area on the outcome variable yi.

- Discuss the implications of the regression coefficients and the potential endogeneity issues addressed by the 2SLS method.

- Evaluate the robustness of your 2SLS estimates by checking for the presence of weak instruments.

- Reflect on the historical context and discuss how it might have influenced the relationship between exports per area and current economic performance.

2.2

Difference-in-Differences (DID)

Google Colab

2.2.1

Introduction

The concept of Difference-in-Differences (DiD) analysis represents a significant methodological approach in the fields of econometrics and statistics, especially when the objective is to estimate the causal impact of an intervention or policy change. DiD is considered a quasi-experimental design because it does not rely on the random assignment of treatment, a condition often unattainable in real-world settings. At its core, DiD analysis compares the evolution of outcomes over time between a group that experiences some form of intervention, known as the treatment group, and a group that does not, referred to as the control group. This comparison is pivotal for discerning the effects attributable directly to the intervention, by observing how outcomes diverge post-intervention between the two groups.

The importance of DiD extends beyond its methodological elegance; it addresses a fundamental challenge in observational studies—the inability to conduct random assignments. This challenge is particularly prevalent in social sciences and policy analysis, where ethical or logistical constraints prevent experimental designs. DiD offers a robust framework for estimating causal effects in these contexts by controlling for unobserved heterogeneity that remains constant over time. Such heterogeneity might include factors intrinsic to the individuals or entities under study that could influence the outcome independently of the treatment. By comparing changes over time across groups, DiD can effectively isolate the intervention’s impact from these confounding factors.

One of the key advantages of the DiD approach lies in its ability to mitigate the effects of confounding variables that do not vary over time. In observational studies, these time-invariant unobserved factors often pose significant threats to the validity of causal inferences. By assuming that these factors affect the treatment and control groups equally, DiD allows researchers to attribute differences in outcomes directly to the intervention. This aspect is particularly crucial when analyzing the impact of policy changes or interventions in environments where controlled experiments are not feasible. Through the use of longitudinal data, DiD analysis offers a more sophisticated and reliable method for causal inference compared to simple before-and-after comparisons or cross-sectional studies, which do not account for unobserved heterogeneity in the same manner.

In summary, the Difference-in-Differences analysis stands as a critical tool in the econometrician’s and statistician’s toolkit, offering a pragmatic solution for estimating causal relationships in the absence of randomized control trials. Its application spans a wide array of disciplines and contexts, from public policy to health economics, highlighting its versatility and effectiveness in contributing to evidence-based decision-making.

2.2.2 Key

Concepts in DiD

In the realm of Difference-in-Differences (DiD) Analysis, understanding the foundational concepts is paramount for accurately estimating the causal effects of interventions or policy changes. These foundational concepts include the delineation of treatment and control groups, the distinction between pre-treatment and post-treatment periods, and the critical assumption of parallel trends. Each concept plays a vital role in the validity and reliability of DiD analysis.

Treatment and Control Groups form the cornerstone of any DiD analysis. The Treatment Group receives the intervention or is subjected to the policy change under investigation, while the Control Group does not receive the treatment and serves as a baseline for comparison. The comparability of these two groups is crucial for a valid DiD analysis.

Pre-Treatment and Post-Treatment Periods are delineated to capture the temporal dynamics of the intervention, comparing changes in outcomes between these periods across both groups to discern the causal impact of the intervention.

The Parallel Trends Assumption presupposes that, in the absence of the treatment, the outcomes for both the treatment and control groups would have progressed parallelly over time. This assumption is essential for attributing observed changes in outcomes directly to the treatment effect.

2.2.3

Theoretical Framework

The Difference-in-Differences (DiD) estimator plays a pivotal role in econometric analysis, allowing researchers to estimate the causal effect of an intervention or policy change. The mathematical formulation of the DiD estimator is essential for delineating the causal impact of such interventions in observational data.

The DiD estimator can be mathematically represented as follows:

| (10) |

In this equation, ΔY signifies the estimated treatment effect. The terms ȲT1 and ȲT0 represent the average outcomes for the treatment group after and before the treatment, respectively. Similarly, ȲC1 and ȲC0 denote the average outcomes for the control group in the post-treatment and pre-treatment periods, respectively. This formulation captures the change in outcomes over time, isolating the effect of the intervention by comparing these changes between the treatment and control groups.

To further elucidate this concept, consider the four key outcomes to understand DiD, presented in the table below:

| Before Treatment (t = 0) | After Treatment (t = 1) | |

| Control Group | ȲC0 | ȲC1 |

| Treatment Group | ȲT0 | ȲT1 |

The DiD estimate, calculated from Equation 10, is thus given by:

The alignment of notation between the equation and the table ensures a coherent and straightforward interpretation of the DiD analysis. This standardized approach facilitates a more intuitive understanding of how the DiD estimator quantifies the causal effect by comparing the differential changes in outcomes between the control and treatment groups across the two periods.

2.2.4

Assumptions Behind DiD

The Difference-in-Differences (DiD) methodology relies on several critical assumptions to ensure the validity of its estimates. Understanding these assumptions is essential for both conducting DiD analyses and interpreting their results.

- Parallel Trends Assumption: This assumption is fundamental to DiD analyses. It posits that, in the absence of the intervention, the difference in outcomes between the treatment and control groups would have remained constant over time. For this assumption to hold, it is necessary that the pre-treatment trends in outcomes are parallel between the treatment and control groups. This parallelism ensures that any deviation from the trend post-intervention can be attributed to the intervention itself rather than pre-existing differences.

-

Other Critical Assumptions:

- No Spillover Effects: It is assumed that the treatment applied to the treatment group does not influence the outcomes of the control group. This ensures that the observed effects are solely attributable to the treatment and not external influences on the control group.

- Stable Composition: The composition of both the treatment and control groups should remain stable over the study period. Significant changes in group composition could introduce biases that affect the outcome measures.

- No Simultaneous Influences: The analysis assumes that there are no other events occurring simultaneously with the treatment that could impact the outcomes. Such events could confound the treatment effects, making it difficult to isolate the impact of the intervention.

-

Testing Assumptions:

- The parallel trends assumption can be examined by analyzing the pre-treatment outcome trends between the groups. This helps to validate the assumption that the groups were on similar trajectories prior to the intervention.

- Conducting robustness checks and sensitivity analyses is crucial for assessing the DiD estimates’ stability against these assumptions. Such analyses help to affirm that the findings are not unduly influenced by violations of the assumptions.

2.2.5

Implementing DiD Analysis

Implementing a Difference-in-Differences (DiD) analysis involves a systematic approach to ensure the accuracy and validity of the estimated treatment effects. This section outlines a step-by-step guide for conducting a DiD analysis and highlights the importance of careful selection of control and treatment groups, as well as the preparation and analysis of data.

- Define the Intervention: Begin by clearly specifying the intervention or policy change under investigation. This includes understanding the nature, timing, and target of the intervention.

- Select Treatment and Control Groups: Identify the groups that did and did not receive the intervention. It is crucial that these groups are comparable in aspects that are fixed over time or unaffected by the treatment to ensure the validity of the DiD estimates.

- Collect Data: Gather data for both the treatment and control groups for adequate periods before and after the intervention. This longitudinal data collection is essential for assessing the impact of the intervention.

- Verify Assumptions: Prior to estimation, check for the parallel trends assumption and other critical assumptions necessary for a valid DiD estimation. This step is crucial for affirming the methodological foundations of your analysis.

- Estimate the DiD Model: Utilize statistical software to estimate the DiD model. This process involves computing the difference in outcomes before and after the intervention between the treatment and control groups, thereby obtaining the treatment effect.

- Conduct Robustness Checks: To ensure the reliability of your findings, perform additional analyses to test the sensitivity of your results to different model specifications, sample selections, or the inclusion of various covariates.

2.2.5.1

Choice of Control and Treatment Groups

The selection of control and treatment groups is pivotal for the validity of DiD estimates. These groups should be similar in characteristics that are fixed over time or unaffected by the treatment. Any significant pre-existing differences between the groups can bias the estimated treatment effect, undermining the credibility of the analysis.

2.2.5.2 Data

Requirements and Preparation

Adequate data collection and preparation are foundational to conducting DiD analysis:

- Collect data on the outcomes of interest for both groups, before and after the intervention. This longitudinal data is critical for assessing the impact of the intervention over time.

- Ensure that the data is cleaned and prepared to ensure consistency and accuracy across all observations. Inconsistent or inaccurate data can lead to erroneous conclusions.

- Consider potential covariates that might influence the outcomes of interest and need to be controlled for in the analysis. Including relevant covariates can help improve the precision of the estimated treatment effect and mitigate potential confounding factors.

2.2.6

Difference-in-Differences (DiD) with

Regression Equations

The Difference-in-Differences (DiD) approach is a pivotal econometric technique used to estimate the causal effect of a policy intervention or treatment. This method relies on a basic regression equation, given by:

| (11) |

where Y it represents the outcome for individual i at time t. The variable Treati indicates whether the individual is in the treatment group (1 if treated, 0 otherwise), and Aftert denotes the time period (1 if after the treatment has been applied, 0 otherwise). The coefficient δ is of particular interest as it captures the causal effect of the treatment, quantified by the interaction of the treatment and time indicators. The error term is represented by 𝜖it.

The coefficients within this regression model carry specific interpretations. The intercept α denotes the baseline outcome when there is no treatment and the observation is from the pre-treatment period. The coefficient β1 measures the difference in outcomes between the treatment and control groups before the application of the treatment, while β2 captures the time effect on outcomes irrespective of the treatment. The DiD estimator, δ, quantifies the additional effect of being in the treatment group after the treatment, essentially isolating the treatment’s impact from other time-related effects.

A statistically significant δ provides robust evidence of the treatment’s causal impact on the outcome variable. The interpretation of δ is central to the DiD methodology, highlighting its utility in assessing policy effectiveness and interventions in a variety of contexts.

2.2.7 DiD with

Multiple Time Periods

Extension to Multiple Periods: Traditional Difference-in-Differences (DiD) analysis compares two groups over two time periods. Extending this to multiple periods enables a more nuanced examination of treatment effects over time, including identifying any delayed effects or changes in impact across different periods.

Two-Way Fixed Effects Models: To incorporate multiple time periods, DiD analysis can be extended using two-way fixed effects models. These models account for both entity-specific and time-specific unobserved heterogeneity. The general equation for such models is as follows:

| (12) |

Here,

Advantages of Multiple Periods: Utilizing multiple periods in DiD analysis has several advantages. It allows for the employment of more complex models to better understand the dynamics of treatment effects, improves the precision of estimated treatment effects by leveraging more data points, and facilitates a more rigorous testing of the parallel trends assumption across multiple pre-treatment periods.

2.2.8 Dynamic

Difference-in-Differences (Dynamic DiD)

In the realm of econometric analysis, the Dynamic Difference-in-Differences (Dynamic DiD) methodology represents an advanced extension of the traditional DiD approach. It is specifically designed to investigate the effects of interventions or policies over multiple time periods, thereby offering a nuanced understanding of how treatment effects evolve both before and after the implementation of the intervention. This model is particularly beneficial for analyzing policies or treatments whose effects are not static but vary over time.

The core of the Dynamic DiD analysis is encapsulated in its regression equation, which is formulated to capture the dynamic nature of treatment effects comprehensively. The regression model is given by:

| (13) |

where

Through its elaborate and carefully constructed framework, the Dynamic DiD model provides researchers with a powerful tool for dissecting the temporal dynamics of treatment effects, ensuring precise and accurate insights into the efficacy of policy interventions.

2.2.9 Common

Pitfalls in DiD Analysis

In the realm of econometric analysis, particularly within the Difference-in-Differences (DiD) framework, researchers often encounter several common pitfalls that can compromise the validity of their findings. Recognizing and addressing these pitfalls is crucial for conducting robust and reliable econometric analyses.

Violation of Parallel Trends Assumption: At the core of DiD analysis lies the parallel trends assumption. This assumption posits that, in the absence of the treatment, the difference in outcomes between the treatment and control groups would remain constant over time. However, this assumption can be violated if external factors affect the groups differently, leading to diverging trends. Researchers must be vigilant for such violations, which can be tested through examining pre-treatment trends or employing placebo tests.

Dealing with Dynamic Treatment Effects: Another significant challenge arises when treatment effects evolve over time. It is not uncommon for immediate effects to differ from long-term effects, necessitating sophisticated modeling and interpretation. Strategies to manage dynamic treatment effects include utilizing event study designs or specifying models that accommodate dynamic effects. It is imperative to select a method that accurately captures the treatment’s temporal dynamics without introducing bias or misinterpreting the effects.

These pitfalls underscore the importance of rigorous methodological approaches and the need for critical analysis in DiD studies. By carefully testing assumptions and appropriately modeling dynamic effects, researchers can enhance the credibility and reliability of their econometric analyses.

DiD Application in Various Fields

Labor Economics: Minimum Wages and Employment: A Case Study of the Fast-Food Industry in New Jersey and Pennsylvania, David Card and Alan B. Krueger, American Economic Review, 1994

- Objective: Explore the impact of the minimum wage increase in New Jersey on employment within the fast-food industry, compared to Pennsylvania where the minimum wage remained constant.

- Methodology & Instrument: Conducted surveys of fast-food restaurants in both states before and after the wage increase to assess changes in employment levels.

- Reason: To challenge the conventional economic theory predicting that increases in minimum wage lead to employment reductions.

- Data: Gathered data from 410 fast-food restaurants, analyzing employment changes in response to the wage adjustment.

- Results: Found no significant reduction in employment in New Jersey post-wage increase, suggesting that higher minimum wages do not necessarily harm employment levels.

Urban Economics: The Effects of Rent Control Expansion on Tenants, Landlords, and Inequality: Evidence from San Francisco, Rebecca Diamond, Tim McQuade, Franklin Qian, American Economic Review, 2019

- Objective: Examine the impacts of rent control expansion in San Francisco on tenants, landlords, and city-wide inequality.

- Methodology & Instrument: Exploits quasi-experimental variation from a 1994 law change to study rent control effects, using detailed data on migration and housing.

- Reason: To understand how rent control affects tenant mobility, landlord responses such as reductions in rental supply, and overall market rents and inequality.

- Data: Utilizes new microdata tracking individual migration and housing characteristics, focusing on the effects of the 1994 rent control law.

- Results: Finds rent control increases tenant stability but leads landlords to decrease rental housing supply, likely driving up market rents and contributing to inequality in the long run.

Development Economics: A Matter of Time: An Impact Evaluation of the Brazilian National Land Credit Program, Steven M. Helfand, Vilma H. Sielawa, Deepak Singhania, Journal of Development Economics, 2019

- Objective: Evaluate the Programa Nacional de Crédito Fundiário’s impact on agricultural production and earned income in Brazil.

- Methodology & Instrument: Difference-in-differences model with either municipal or individual fixed effects, using a panel dataset and a pipeline control group.

- Reason: To analyze how market-assisted land reform influences rural poverty reduction and economic development.

- Data: Panel data from 2006 to 2010 of beneficiaries randomly selected from program participants and a control group from the program’s pipeline.

- Results: Indicates significant increases in production and income by about 74

Health Economics and Policy: Education and Mortality: Evidence from a Social Experiment, Costas Meghir, Mårten Palme, and Emilia Simeonova, American Economic Journal: Applied Economics, 2018

- Objective: Analyze the long-term health consequences of Sweden’s increase in compulsory schooling years through a major educational reform.

- Methodology & Instrument: Utilizes the gradual implementation of the reform across municipalities as a natural experiment to assess impacts on mortality and health.

- Reason: To establish a causal link between increased education and health outcomes, challenging conventional correlations between socioeconomic status and health.

- Data: Comprehensive register data including mortality, hospitalizations, and prescription drug consumption for about 1.5 million individuals born between 1940 and 1957.

- Results: Finds no significant impact of the reform on life expectancy or health outcomes, despite increasing educational attainment.

Health Policy: Four Years Later: Insurance Coverage and Access to Care Continue to Diverge between ACA Medicaid Expansion and Non-Expansion States, Sarah Miller and Laura R. Wherry, AEA Papers and Proceedings, 2019

- Objective: Examine the long-term impact of ACA Medicaid expansions on insurance coverage, access to care, and financial strain among low-income adults.

- Methodology: Uses National Health Interview Survey data and an event-study framework to compare outcomes between expansion and non-expansion states from 2010 to 2017.

- Reason: To understand the lasting effects of ACA Medicaid expansions on health insurance coverage and healthcare access.

- Data: Analyzed survey data covering a period of eight years to assess changes in insurance coverage, access to medical care, and financial stress due to medical bills.

- Results: Found significant improvements in insurance coverage and access to care in expansion states, with reductions in financial strain, but no strong evidence of changes in health outcomes.

2.2.10 Triple

Differences

The Triple Differences (TD) method extends the traditional Difference-in-Differences (DiD) approach by introducing an additional dimension to the analysis. This method is particularly useful for isolating and examining the effects of a treatment across different subgroups or time periods, beyond the basic treatment and control group comparison.

Regression Equation: The foundational equation for the TD analysis can be represented as follows:

|

In this model, the coefficient

Application Example: An illustrative

application of the TD method can be seen in the evaluation of an

educational policy aimed at improving student outcomes. Consider a

scenario where the treatment group consists of schools implementing

a new teaching method, contrasted with a control group of schools

that continue with traditional methods. An additional dimension in

this analysis is the socio-economic status (SES) of the school

district, which is categorized into high or low SES. The objective

is to ascertain whether the policy’s effect varies not only before

and after its implementation but also across districts with

differing SES levels. A significantly positive

This example highlights the TD method’s capacity to uncover differential impacts of policies or treatments, facilitating a more granular understanding of their effectiveness across various segments or conditions.

Triple Differences Application in Various Fields

Environmental Economics: Heat Exposure and Youth Migration in Central America and the Caribbean, Javier Baez, German Caruso, Valerie Mueller, and Chiyu Niu, American Economic Review, 2017

- Objective: Analyze how heat exposure influences youth migration decisions in Central America and the Caribbean.

- Methodology & Instrument: The study employs a triple difference-in-difference quasi-experimental design to examine the migration response to temperature extremes.

- Reason: To understand environmental drivers of migration, particularly in the context of climate change.

- Data: Uses census data across several countries in the region to track inter-province migration patterns related to climate variables.

- Results: Identifies significant migration patterns among youth in response to heat exposure, contributing to discussions on climate adaptation strategies.

Labor Market Dynamics: The Demand for Hours of Labor: Direct Evidence from California, Daniel S. Hamermesh, Stephen J. Trejo, Review of Economics and Statistics, 2000

- Objective: Examine the effect of California’s policy requiring overtime pay for work beyond eight hours in a day, extended to men in 1980, on the labor market.

- Methodology: Analyzes data from the Current Population Survey (CPS) between 1973 and 1991 to evaluate changes in daily overtime work patterns among California men compared to men in other states.

- Reason: To assess how overtime pay regulation affects employment practices and workers’ hours.

- Data: Utilized CPS data focusing on changes in work hours before and after the policy change.

- Results: Found that the overtime regulation significantly reduced the amount of daily overtime worked by men in California compared to other states, indicating a strong response to the policy in terms of reduced overtime hours.

2.2.11

Synthetic Control Methods

Synthetic Control Methods represent a sophisticated approach in the econometrics toolbox, especially valuable in comparative case studies where traditional control groups may not be feasible. This method involves creating a weighted combination of control units to construct a "synthetic control." The synthetic control aims to closely approximate the characteristics of a treated unit before the intervention, offering a novel way to estimate the counterfactual—what would have happened in the absence of the intervention.

Key Features:

- The primary objective is to construct a counterfactual that can accurately estimate the intervention’s effect. This is achieved by selecting a combination of predictors and control units that best replicate the pre-treatment characteristics of the treated unit.

- It significantly enhances causal inference in studies characterized by a small number of units and situations where randomization is not feasible.

Advantages:

- Precision: By tailoring the synthetic control to match specific characteristics of the treated unit, this method improves the accuracy of the estimation.

- Flexibility: Its application is not limited to a single field or type of intervention, making it a versatile tool in empirical research.

- Transparency: The process of constructing the synthetic control is explicit, enhancing the clarity of interpretation and facilitating validation of the results.

Application Example: Consider the evaluation of the economic impact of a new tax policy introduced in a specific region. By comparing the post-intervention economic indicators of the region with a synthetic control, which is constructed from a combination of regions not affected by the policy, researchers can isolate and assess the policy’s true impact. This method allows for a nuanced analysis that accounts for the complex interplay of various factors influencing the outcome, providing a robust framework for causal inference in policy evaluation.

This section encapsulates the essence of Synthetic Control Methods, delineating its methodology, utility, and application in a manner that is accessible and informative for students pursuing advanced studies in econometrics.

Synthetic Control Application in Various Fields

Public Economics and Debt Relief: Borrowing Costs after Sovereign Debt Relief, Valentin Lang, David Mihalyi, and Andrea F. Presbitero, American Economic Journal: Economic Policy, 2023

- Objective: Examine the effects of the Debt Service Suspension Initiative (DSSI) on sovereign bond spreads and assess whether debt moratoria can aid countries during adverse conditions.

- Methodology & Instrument: Employs synthetic control and difference-in-differences methods using daily data on sovereign bond spreads, comparing DSSI-eligible countries to similar ineligible ones.

- Reason: To understand the bond market reactions to official debt relief and address concerns regarding potential stigma effects.

- Data: Daily sovereign bond spread data, alongside macroeconomic indicators.

- Results: Finds that countries eligible for the DSSI experienced significant declines in borrowing costs, suggesting positive liquidity effects of the initiative without the feared market stigma.

2.2.12

Summarizing Key Insights

This section concludes our exploration of advanced econometric methods, with a particular focus on the Difference-in-Differences (DiD) approach, its extensions, and applications. Below, we summarize the critical insights garnered from our discussions:

- Foundation of DiD: DiD stands as a robust framework for estimating causal effects within observational data. It addresses the limitations inherent in traditional comparative analyses by controlling for unobserved, time-invariant differences between the treatment and control groups, thereby enhancing the credibility of causal inference.

- Parallel Trends Assumption: The efficacy of DiD analysis hinges on the parallel trends assumption, which requires that, in the absence of treatment, the difference between treatment and control groups would remain constant over time. This assumption is critical and necessitates thorough pre-analysis checks and the careful selection of control and treatment groups to ensure validity.

- Methodological Extensions: Extensions to the basic DiD framework, such as Triple Differences and Synthetic Control Methods, offer sophisticated tools for dealing with more complex scenarios. These extensions allow for a more nuanced understanding of policy impacts, accommodating situations where traditional DiD assumptions may not hold.

- Versatility across Fields: Through examples from labor, urban, health, and development economics, DiD analysis demonstrates its versatility as a tool in economic research. It has proven capable of uncovering the nuanced effects of interventions across a variety of contexts.

- Value in Empirical Analysis: The adaptability of DiD methodology across different domains highlights its invaluable contribution to empirical analysis. It pushes the boundaries of our understanding in economic policy and beyond, offering a powerful lens through which to examine the causal impact of interventions.

These insights underscore the significance of DiD and its extensions in the field of econometrics. By providing a framework for rigorous causal analysis, DiD methods enable researchers to draw more accurate conclusions about the effects of policies and interventions, thereby contributing to more informed decision-making in policy and practice.

2.2.13

Empirical Exercises:

Google Colab

Exercise 1: Effects of Rent Control Expansion

This section investigates the study by Diamond, McQuade, and Qian (2019), which examines the effects of rent control expansion on tenants, landlords, and housing inequality in San Francisco. The research utilizes a natural experiment stemming from a 1994 ballot initiative that extended rent control to smaller multi-family buildings constructed prior to 1980, offering a unique opportunity to study the policy’s impact.

Key Variables and Data Overview

- Dependent Variables: Tenant mobility, landlord responses (e.g., building conversions), and housing market dynamics.

- Independent Variable: Rent control status, determined by the building’s construction date relative to the 1994 law change.

- Quasi-Experimental Design: Comparison between buildings constructed just before and just after the 1980 cutoff, serving as a natural experiment.

Reproduction Tasks

Recreate the analysis delineating the effects of rent control on tenant stability, landlord behaviors, and the broader housing market in San Francisco.

Estimation Tasks

- Difference-in-Differences (DiD) Analysis: Evaluate the impact of rent control on tenant mobility and landlord decisions.

- Geographic Distribution Analysis: Analyze the spatial distribution of treated and control buildings to understand the policy’s citywide implications.

Regression Equations

Tenant Mobility and Landlord Responses

| (14) |

| (15) |

Effects on Inequality

| (16) |

Difference-in-Differences Analysis

| (17) |

Notes: This equation represents the difference-in-differences analysis used in the study to evaluate the impact of rent control on tenant stability. Y iszt denotes the outcome of interest for individual i, in state s, at time t, with treatment status Ti, where δzt captures time and location fixed effects, αi captures individual fixed effects, βt represents the effect of the rent control treatment, and γst represents state-time interactions.

Empirical Results from the Study

Tenant Mobility

The study finds that rent control significantly reduces tenant mobility, locking tenants into their apartments and preventing displacement.

Landlord Responses

Landlords of rent-controlled buildings are more likely to convert these buildings into condos or redevelop them, reducing the supply of rental housing.

Housing Market Dynamics

The reduction in rental housing supply leads to higher rents in the long term, counteracting the intended goals of rent control policies.

Analyzing the Impact

- Discuss how rent control policies affect tenant decisions to move or stay.

- Examine the responses by landlords to rent control and its implications for the rental market and housing inequality.

- Reflect on the broader economic and social implications of rent control on San Francisco’s housing market.

Interpreting Regression Results

Consider the study’s findings and discuss the implications of rent control policies on tenants, landlords, and the overall housing market dynamics in urban settings.

2.3 Regression

Discontinuity Design (RDD)

Google Colab

2.3.1

Introduction to Regression Discontinuity

Design

Regression Discontinuity Design (RDD) is a quasi-experimental pretest-posttest design that stands out in the landscape of econometric analysis for its unique approach to causal inference. Unlike traditional experimental designs that rely on random assignment to treatment and control groups, RDD exploits a predetermined cutoff point. This methodological innovation makes RDD particularly valuable in fields where conducting controlled experiments is either impractical or unethical, such as in economics, education, and public policy.

At its core, RDD enables researchers to estimate the causal effects of interventions by observing units just above and below a clearly defined threshold. This design is predicated on the assumption that units on either side of the cutoff are comparable, thereby allowing for a credible estimation of the treatment effect. The importance of RDD in causal inference arises from its ability to provide robust estimates of causal effects in situations where randomization is not possible. It has become an indispensable tool for policy evaluation, especially when policy eligibility is determined by specific criteria or thresholds.

Over time, RDD has evolved significantly, moving beyond simple comparisons across a threshold to incorporate more complex forms, such as fuzzy design, which deals with imperfect compliance, and kernel-based estimation, which improves the precision of estimates. The methodology has also expanded to consider multiple cutoffs and dimensions, broadening its applicability and enhancing its utility in empirical research. This evolution reflects the adaptability of RDD to the complexities of real-world data and underscores its critical role in the econometrician’s toolkit.

2.3.2

Theoretical Foundations of Regression

Discontinuity Design

The theoretical underpinnings of Regression Discontinuity Design (RDD) are essential for understanding its role in causal inference. The identification strategy of RDD hinges on the comparison of observations infinitesimally above and below a predetermined cutoff point. This approach is predicated on the assumption that units immediately on either side of the cutoff are comparable in all aspects other than the treatment received. Such a condition facilitates a credible estimation of the treatment effect, leveraging the abrupt change at the cutoff as a natural experiment.

RDD’s methodology aligns closely with the principles outlined in the Rubin Causal Model (RCM) framework. The RCM emphasizes the significance of potential outcomes in estimating causal effects and considers the creation of a credible counterfactual scenario as pivotal. In the context of RDD, the cutoff point effectively generates this counterfactual scenario, where units just below the cutoff serve as a stand-in for what would have happened to the treated units had they not received the treatment. This alignment with RCM principles fortifies RDD’s credibility as a tool for causal inference, especially in settings where randomized controlled trials are not feasible.

An important concept within RDD, particularly in its fuzzy variant, is the Local Average Treatment Effect (LATE). LATE represents the causal effect of the treatment on units at the cutoff point, acknowledging that not all units might comply with the treatment assignment based on the cutoff criterion. This consideration is crucial in fuzzy RDD, where treatment application is imperfect, making LATE a more accurate representation of the treatment effect for the subset of units—referred to as compliers—that adhere to the assignment rule. The delineation of LATE underscores RDD’s flexibility in accommodating real-world complexities in treatment assignment, further enhancing its applicability across various research domains.

2.3.3

Comparative Analysis of Sharp vs. Fuzzy

Regression Discontinuity Design

Regression Discontinuity Design (RDD) is an econometric methodology pivotal for causal inference, delineated into two principal variants: Sharp RDD and Fuzzy RDD. These variants cater to differing scenarios of treatment assignment and compliance, each providing unique methodological insights into the estimation of causal effects.

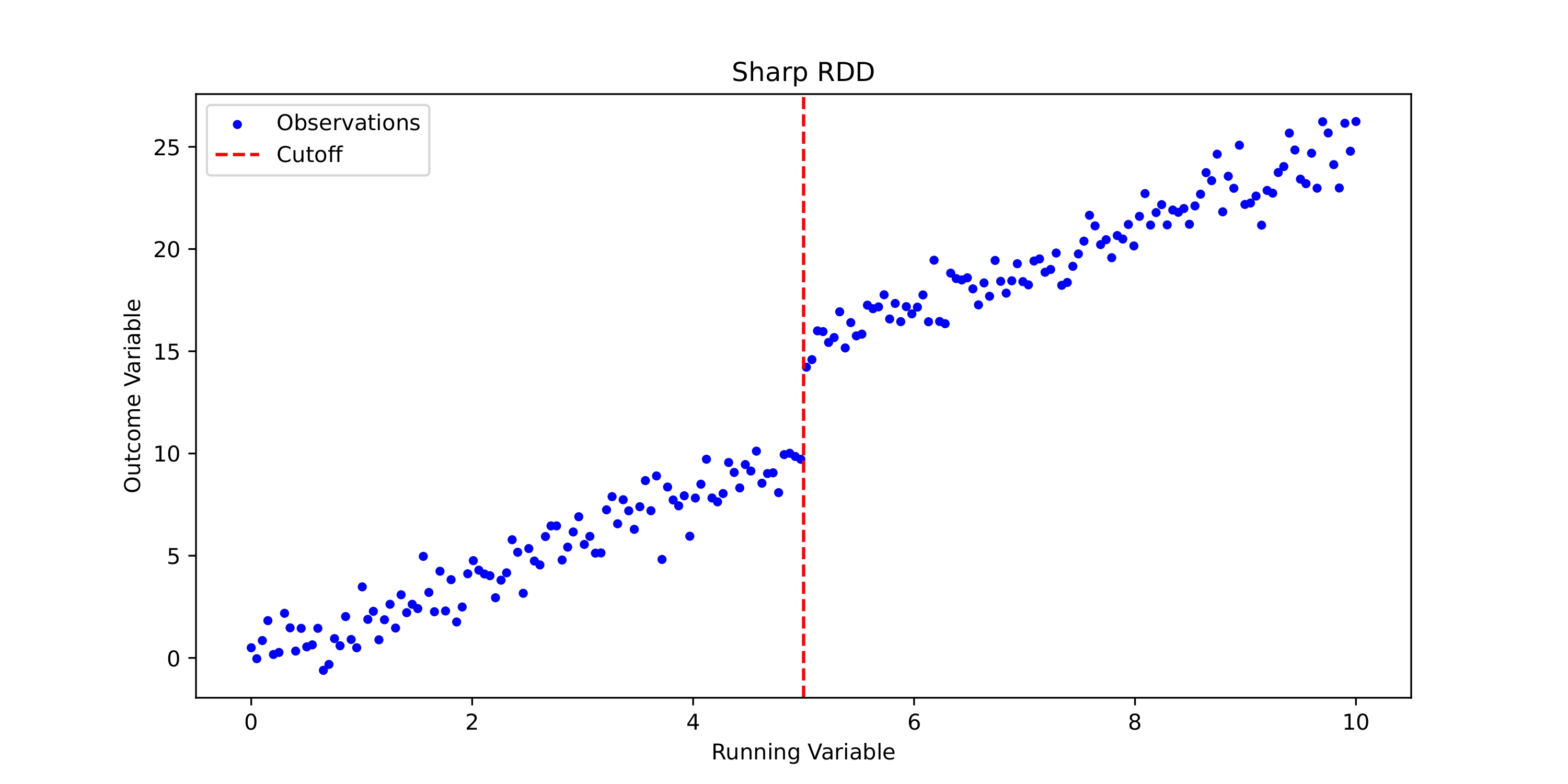

Sharp RDD: Sharp RDD is characterized by a distinct discontinuity at a predetermined cutoff point, which cleanly separates the treatment group from the control group under the premise of perfect compliance. This means that all units above the cutoff are unequivocally treated, whereas those below it are not. Such clarity in treatment assignment, depicted in Figure 1, makes Sharp RDD particularly suited to scenarios where the treatment assignment criterion is based strictly on the running variable, allowing for an unambiguous estimation of the treatment effect.

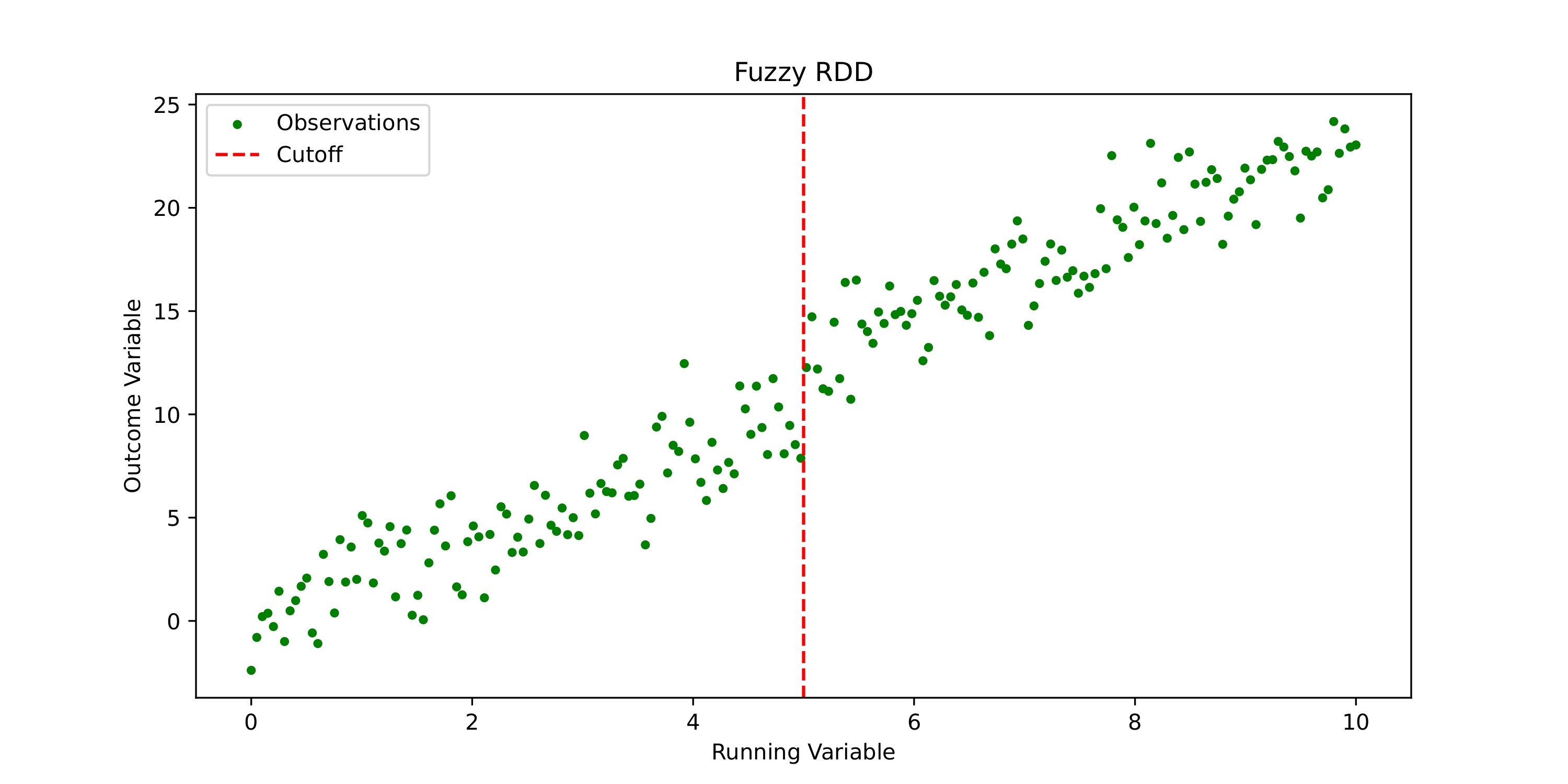

Fuzzy RDD: In contrast, Fuzzy RDD deals with scenarios where compliance with the treatment assignment is imperfect. This means not all units above the cutoff necessarily receive the treatment, and similarly, some units below it might be treated. This variability in treatment assignment leads to a smoother transition around the cutoff, as illustrated in Figure 2, and is emblematic of real-world conditions where strict adherence to the assignment rule is not feasible.

Key Considerations:

- The cutoff point plays a pivotal role in both Sharp and Fuzzy RDD, serving as the demarcation line for treatment and control groups. The clarity of this cutoff in Sharp RDD and its more nuanced interpretation in Fuzzy RDD are crucial for the identification of causal effects.

- Sharp RDD is favored when treatment assignment can be clearly and unequivocally determined by the running variable, as visually represented in Figure 1.